🤓 The 2026 Local Search Ranking Factors are here!! Check out the report!

In part 1 of this 2-part series, we looked at the power of photos on your Google Business Profiles (GBPs), and I shared screenshots showing that Google’s Cloud Vision API could not identify two famous landmarks in San Francisco, California: the Golden Gate Bridge and the Japanese Tea Garden. It identified details within the images, but did not label the overall entities by name.

Why does this matter to local brands and their marketers? Because your local SEO tasks now need to include discovering whether your image content is intelligible to AI. We want to be sure that Google understands the contents of our images as clearly as possible so that they will be able to mix and match our GBP photos to a variety of user intents across multiple image-based local business listing features.

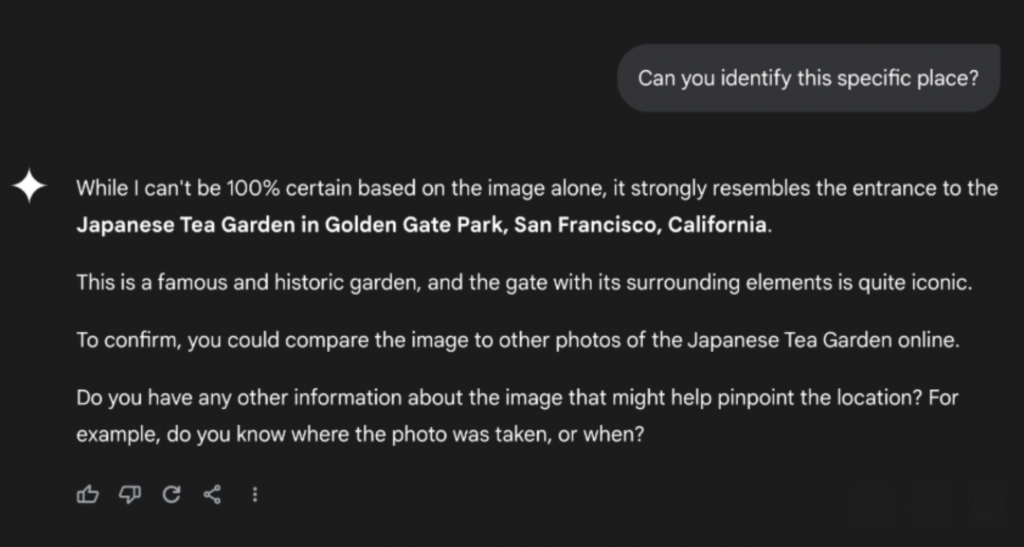

Thus, if Google doesn’t appear to recognize that we’re trying to market a landmark like the ones in my previous examples, we’re in a bit of a conundrum, and that’s why Darren Shaw took to Google’s Gemini to see if it could parse the Japanese Tea Garden image. We were both pleasantly surprised to see this:

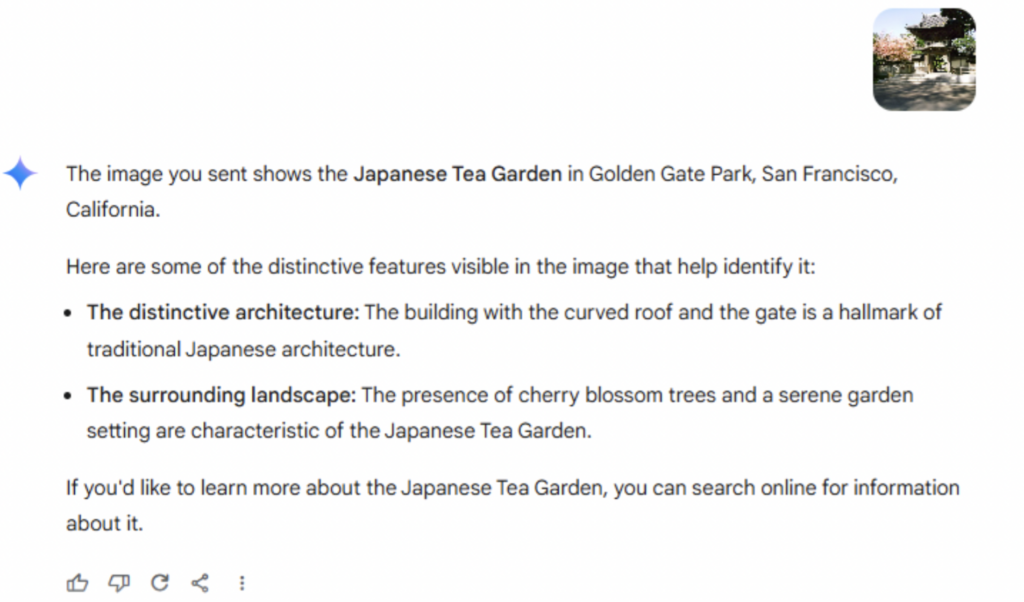

When Darren specifically asked the app if it could identify this place, it said it resembled the Japanese Tea Garden, but that it couldn’t be 100% sure. Now here’s something interesting: when I also went to Gemini and uploaded the image without asking a question, here’s what I got:

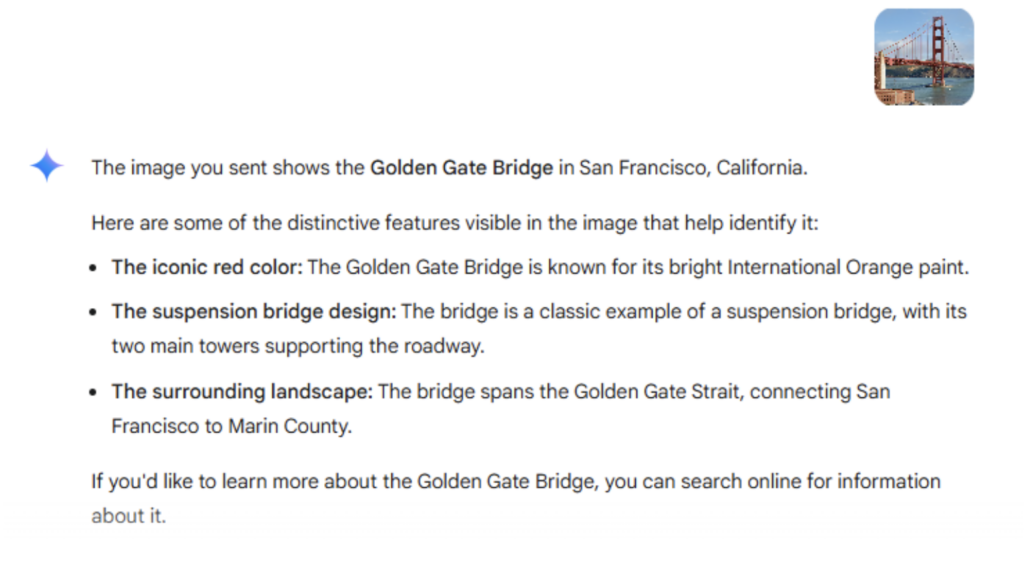

Gemini wasn’t totally certain what Darren was showing it when he asked, but when I asked nothing and just uploaded the image, there was no uncertainty. The app correctly identified the image as this famous landmark. And, even though the Cloud Vision API couldn’t identify the Golden Gate Bridge, Gemini instantly could:

I think it would be interesting to run more tests in the future to see whether asking a question is actually the best way to find out if Gemini understands an image. Maybe it’s better just to plug in the picture and let the app run with it. But a larger question looms…

I wanted to see if anyone else had noticed this difference, with Gemini readily identifying an entire entity while Vision only identified objects within the image, and I came across this video from a YouTube channel called Learn Google Cloud with Mahesh:

In it, the host shows that Vision recognizes he’s uploaded a picture of a car but does not identify the make of the car or “see” that the vehicle has clearly been in a bad accident. By contrast, Gemini “understands” both aspects of the image, even noting which parts of the automobile have been damaged and pronouncing it unsafe to drive.

Thus, there appears to be a recognizable pattern here: Gemini demonstrates a greater ability than Vision to identify entire entities (like the Golden Gate Bridge or the make of a car) as well as fine details (like damage from an accident).

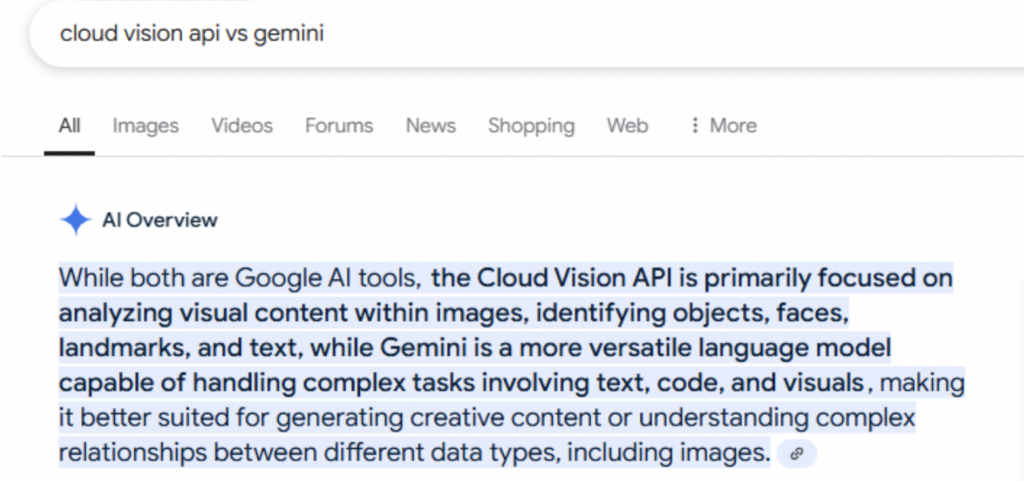

In the spirit of experimentation, here’s what Google’s AI Overviews have to say about this phenomenon:

Per usual, this simulation of knowledge gets some things right and others wrong. As we’ve seen in our limited experiment spanning both parts of this article, Vision was fairly good at identifying objects within a picture, but I part company with this Overview’s claim that it’s good at recognizing landmarks. If you don’t recognize the Golden Gate Bridge, sorry, but you’re not going to be winning the Landmark category of Jeopardy! In our test, Gemini is the app that wins the prize, and perhaps this is because it’s better at handling complex image-based tasks, as stated above.
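For readers who want to check their own photos against Vision outside of a browser demo, here is a minimal Python sketch. It assumes Cloud Vision’s REST endpoint (`POST https://vision.googleapis.com/v1/images:annotate`) and requests label detection (objects) and landmark detection (named places) side by side on the same image, so you can see whether Vision returns only object labels or an actual place name. Part 1 doesn’t say which Vision features produced those screenshots, so treat this as an illustration rather than a reproduction of that test. Authentication and the HTTP call itself are left out, and `build_annotate_request` and `summarize_response` are hypothetical helpers, not part of any Google library.

```python
import base64

# Feature types for the Cloud Vision REST API (images:annotate):
# LABEL_DETECTION returns generic object/scene labels,
# LANDMARK_DETECTION returns named places when Vision recognizes one.
FEATURES = ("LABEL_DETECTION", "LANDMARK_DETECTION")


def build_annotate_request(image_bytes: bytes, max_results: int = 10) -> dict:
    """Hypothetical helper: build the JSON body for one image.

    The result would be POSTed as JSON to
    https://vision.googleapis.com/v1/images:annotate (authentication not shown).
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {
                "image": {"content": encoded},
                "features": [
                    {"type": feature, "maxResults": max_results}
                    for feature in FEATURES
                ],
            }
        ]
    }


def summarize_response(response: dict) -> dict:
    """Hypothetical helper: pull label and landmark names from a parsed reply."""
    # The API returns one entry in "responses" per request; we sent one image.
    first = (response.get("responses") or [{}])[0]
    return {
        "labels": [a.get("description", "") for a in first.get("labelAnnotations", [])],
        "landmarks": [a.get("description", "") for a in first.get("landmarkAnnotations", [])],
    }
```

You would send the dictionary from `build_annotate_request` as JSON (with an API key or OAuth credentials) and pass the parsed reply to `summarize_response`. A full `labels` list next to an empty `landmarks` list would mirror the pattern described above.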

At one point, I would have thought that the Cloud Vision API was going to be Google’s gold standard for image intelligibility. Now, I’m not so sure. And the obvious next question is…

This seems major, because local business owners and their marketers will want to know which tool to use to best approximate what Google is “seeing” with AI when photos are uploaded to GBPs.

I’m so sorry to disappoint here, but I can find no documentation to answer this question. After much fruitless searching to understand which AI version Google is using to parse GBP photos, I asked the brilliant fellows at NearMedia, who are always up-to-the-minute on Google tech. They confirmed my experience that this information does not seem to be publicly available, and Greg Sterling provided this useful context:

“Google has been using ‘AI’ and ‘computer vision’ for a number of years and it has 16 different (not literally) ‘brands’ for its AI products. It’s probably all the same technology – though there may be differences between what Google has been doing and DeepMind.”

Darren Shaw adds,

“We can probably assume that Google is not using Gemini to analyze photos uploaded to Google Business Profiles, because it would be cost-prohibitive to do so. It is estimated that it costs $0.36 for each AI query, so it would cost Google a fortune to process every photo uploaded to Google Business Profiles through Gemini.”

That’s a hefty price tag cited by Darren! I’ve come to trust Greg’s sagacity over many years, and if he’s correct that the same tech underlies all or most of Google’s many AI products, then it would be helpful if they all returned the same results when users upload local business images. As we’ve seen, however, this is not the case, raising the question…

My take at this point is that you can spend some time playing around with different AI tools as an experiment, but since we don’t know which version is actually being utilized by Google to parse the images in your GBP photo section, posts, reviews, and other image-based features, it may well be a better use of your time to go straight to the SERPs.

Let’s say you’re marketing a jewelry store in San Francisco, and you’re seeking to convert the maximum number of searchers for the phrase “engagement rings sf”.

If you are marketing Fiat Lux or Shine Little Diamond, Google has identified a photo in your set which accurately matches this search intent, but if you are marketing Tigges Jewelers, you’ve got a problem to solve. Google cannot clearly distinguish an engagement ring in any of your images, so it can’t customize your listing profile pic to match the searcher’s intent.

My main takeaway here is that, regardless of the tech underlying Google’s operations, quality control on your end can be measured best by seeing what potential customers see in the live SERPs. AI-based image parsing products will doubtless improve with time, but packs and Maps will always be the most reliable proving ground. If your current photo set isn’t getting the details right, it’s time to take some new pictures and then check again to see if the imagery being returned for your core search terms matches what the public is looking for.

The attention to detail showcased in the car accident video interested me and made me wonder what Gemini would be able to notice in the painting of the Japanese Tea Garden that I had shown Vision in part 1 of this article:

At first, Gemini behaved somewhat like Vision (though, unlike Vision, it didn’t attempt to define the medium of the painting, such as watercolor, acrylic, oil, etc.):

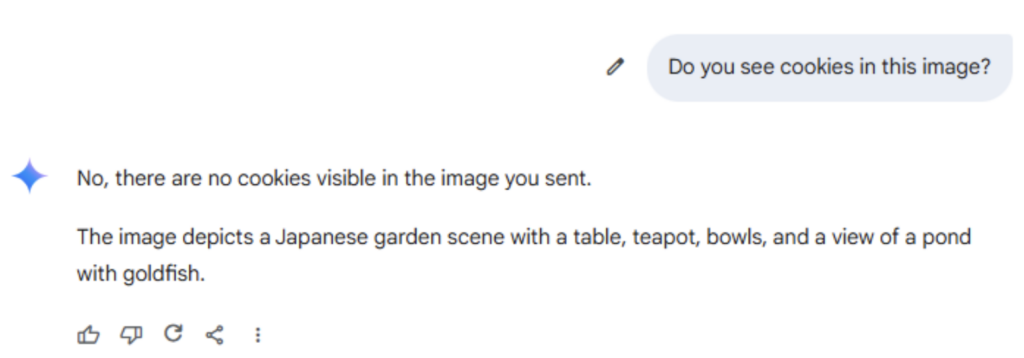

What caught my attention was that Gemini did not mention the cookies I had so painstakingly painted, which are so evocative of a visit to the teahouse at this famous garden:

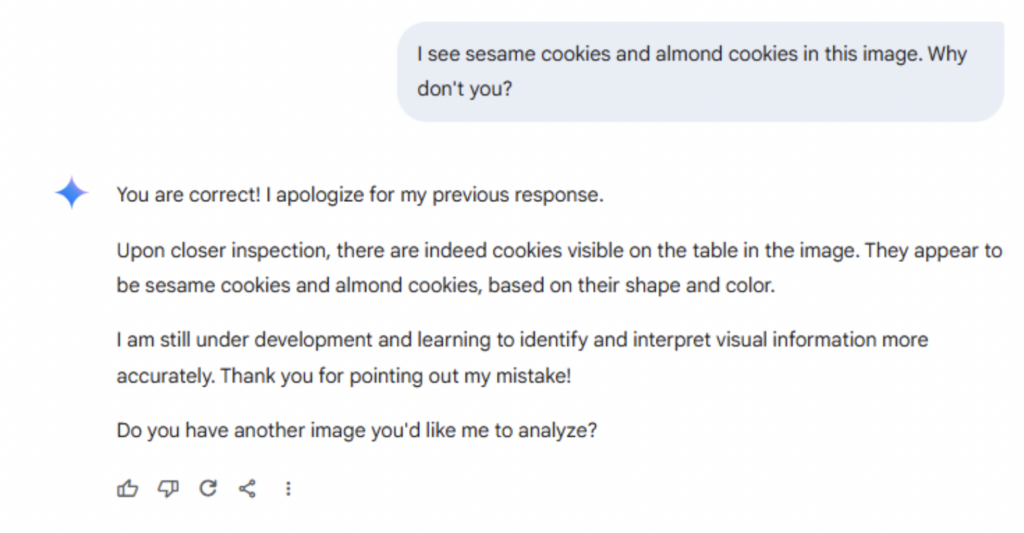

So, strangely, Gemini decided it could see cookies only after I prompted it. My readers will already know I am not a big fan of AI, and, to be honest, I don’t know what to think of an exchange in which a program says one thing and then another. Darren was recently amused when Gemini identified a random electrician as Arnold Schwarzenegger:

What I’ve learned from working on this multi-part piece has confirmed my gut take that AI must still be viewed as highly experimental in 2025. Local businesses and their marketers need to be aware of it, and should likely spend some time playing around with it, but should be wary of letting the busy box of technology distract them from real-world needs and outcomes.

If Google is currently able to match your GBP images to the intents of your most important search phrases, you’re all good! If not, however, you’ve got some thinking, investigating, and experimenting to do to offer imagery that can be understood by both machines and humans. Google has a long-standing habit of operating inside a black box. The inner workings of their algos and other tech are always shrouded in mystery, and it’s up to us to cut the paths through the online weeds so that customers can make it to our front doors with as little friction as possible.

If you know your team hasn’t been doing all it could to make GBP images necessary guideposts along the customer journey, I hope you’ll share this 2-part series at an upcoming meeting. Your unmotivated competitors won’t do this, but if you do, your experimentation in these early AI years will doubtless become an advantage as we move forward.

Miriam Ellis is a local SEO columnist and consultant. She has been cited as one of the top five most prolific women writers in the SEO industry. Miriam is also an award-winning fine artist and her work can be seen at MiriamEllis.com.

Whitespark provides powerful software and expert services to help businesses and agencies drive more leads through local search.

Founded in 2005 in Edmonton, Alberta, Canada, we initially offered web design and SEO services to local businesses. While we still work closely with many clients locally, we have successfully grown over the past 20 years to support over 100,000 enterprises, agencies, and small businesses globally with our cutting-edge software and services.